Hello again.

I recently deployed two Kaltura all-in-one (AIO) instances successfully, and now I want to deploy a fully functional clustered Kaltura. I followed the instructions here:

Everything is fine in principle.

I deployed two hosts for each component:

10.0.2.10 db-back1

10.0.2.11 db-back2

10.0.2.12 sphinx1

10.0.2.13 sphinx2

10.0.2.14 front1

10.0.2.15 front2

10.0.2.16 batch1

10.0.2.17 batch2

10.0.2.18 dwh1

10.0.2.19 dwh2

10.0.2.20 vod1

10.0.2.21 vod2

HAProxy is set up as per the reference configuration, with the obvious changes. The reference is haproxy.cfg on the master branch of kaltura/platform-install-packages on GitHub.

All machines can ping each other, and there is no firewall. SELinux is disabled.

HAProxy sits in front of the cluster.
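Ping only proves ICMP reachability, so it may be worth confirming that the service ports themselves accept TCP connections between nodes. Below is a minimal sketch of such a check. The role-to-port map is an assumption on my part (3306 for MySQL, 9312 for Sphinx searchd, 80 for the front nodes) and should be adjusted to whatever the cluster actually uses; `port_open` and `run_checks` are hypothetical helper names.

```python
import socket

def port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False

# ASSUMPTION: default ports; hostnames are the ones listed in the post.
CHECKS = {
    "db": (["db-back1", "db-back2"], 3306),
    "sphinx": (["sphinx1", "sphinx2"], 9312),
    "front": (["front1", "front2"], 80),
}

def run_checks(checks=CHECKS):
    """Probe every host/port pair and return {(host, port): bool}."""
    results = {}
    for _role, (hosts, port) in checks.items():
        for host in hosts:
            results[(host, port)] = port_open(host, port)
    return results
```

Running `run_checks()` from a batch node and from a front node gives a quick picture of which services each one can actually reach.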

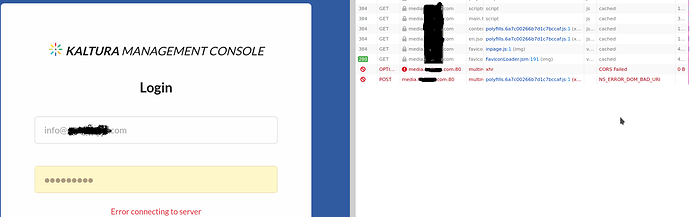

The first obvious issue is that when I try to log in to the admin console I get a login error: RC : 301.
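A 301 is an HTTP redirect, so one way to narrow this down is to request the API URL without following redirects and compare the `Location` header with what was requested. The sketch below does that with the standard library. `fetch_status` and `describe_redirect` are hypothetical helpers, and the example URL in the usage note (load balancer hostname and the `system`/`ping` API call) is an assumption, not something taken from my configuration.

```python
import http.client
from urllib.parse import urlsplit

def fetch_status(url, timeout=5):
    """GET url without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()

def describe_redirect(requested_url, status, location):
    """Summarise how a redirect target differs from the requested URL."""
    if status not in (301, 302, 307, 308) or not location:
        return "no redirect"
    req, loc = urlsplit(requested_url), urlsplit(location)
    diffs = []
    if loc.scheme and loc.scheme != req.scheme:
        diffs.append(f"scheme {req.scheme} -> {loc.scheme}")
    if loc.netloc and loc.netloc != req.netloc:
        diffs.append(f"host {req.netloc} -> {loc.netloc}")
    if loc.path != req.path:
        diffs.append(f"path {req.path} -> {loc.path}")
    return ", ".join(diffs) or "same URL"
```

For example, `describe_redirect(url, *fetch_status(url))` with `url` set to something like `http://<load-balancer>/api_v3/index.php?service=system&action=ping` would show whether the redirect changes the scheme (http to https) or the hostname, which would point at a mismatch between the configured service URL and what the load balancer or front nodes expect.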

Now, in the first batch host I see:

--

==> /opt/kaltura/log/batch/validatelivemediaservers-0-2021-04-28.err.log <==

PHP Fatal error: Uncaught exception 'KalturaClientException' with message 'failed to unserialize server result

' in /opt/kaltura/app/batch/client/KalturaClientBase.php:401

Stack trace:

#0 /opt/kaltura/app/batch/client/KalturaClient.php(2401): KalturaClientBase->doQueue()

--

2021-04-28 21:56:07 [0.004964] [1159369195] [8] [BATCH] [KalturaClientBase->doQueue] NOTICE: result (serialized):

2021-04-28 21:56:07 [0.000155] [1159369195] [9] [BATCH] [KScheduleHelper->run] ERR: exception 'Exception' with message 'System is not yet ready - ping failed' in /opt/kaltura/app/infra/log/KalturaLog.php:88

Stack trace:

#0 /opt/kaltura/app/batch/batches/KScheduleHelper.class.php(42): KalturaLog::err('System is not y...')

This got me thinking. How does it know where to go? There is no mention of ANY batch host in /etc/kaltura.d/system.ini after all…

Then the questions started flooding in:

- Does the DWH need to have its own DB?

- How does each component know where to go?

Some are obvious:

The DB is replicated (and I guess it can be load-balanced too)

The front is load-balanced

But then…

Sphinx seems to be set by config (but how does it know where the second one is?)

Same for batch: the instructions say it writes itself to the DB… but how does it know where to find it? Via the API?

What about Elasticsearch? Can't it run on a separate host?

Finally, for VOD, where do I specify where to find it? My system.ini only had:

PRIMARY_MEDIA_SERVER_HOST=

Although in my answers file I added:

VOD_PACKAGER_HOST="vod1"
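To see which settings ended up blank after the install, a small script can list every key with an empty value. This is a minimal sketch that assumes the file consists of plain `KEY=value` lines like the two shown above; `empty_settings` is a hypothetical helper, not part of Kaltura.

```python
def empty_settings(text):
    """Return the keys whose value is empty in KEY=VALUE formatted text."""
    empty = []
    for raw in text.splitlines():
        line = raw.strip()
        # Skip blanks, comments, section headers and lines without '='.
        if not line or line.startswith(("#", ";", "[")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Treat KEY=, KEY="" and KEY='' as empty.
        if not value.strip().strip("\"'"):
            empty.append(key.strip())
    return empty
```

Feeding it the contents of /etc/kaltura.d/system.ini on each node (for example via `open(path).read()`) makes it easy to compare which hosts have which settings unset.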

So, after success with AIO, I now face new challenges with a clustered Kaltura. I am trying to find official documentation for Kaltura, but aside from the instructions on GitHub, there seems to be nothing at all. Please correct me if I am wrong; I am more than happy to read through documentation for this type of setup, if there is any.