Hello @Sakurai,

I think the Kaltura system consists of the following server roles:

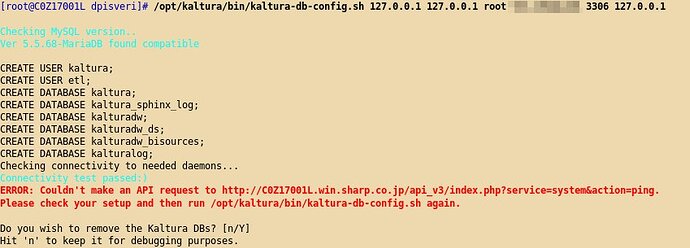

front node (apache, kaltura-front, etc.)

sphinx node (kaltura-sphinx, kaltura-populate, kaltura-elasticsearch, etc.)

streaming server (kaltura-nginx, etc.)

batch server (kaltura-batch, etc.)

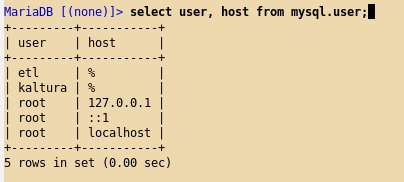

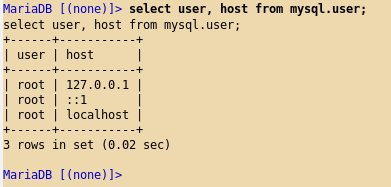

database server (mysql or mariadb, etc.)

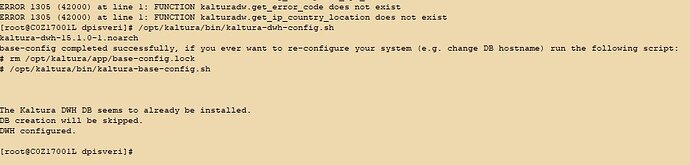

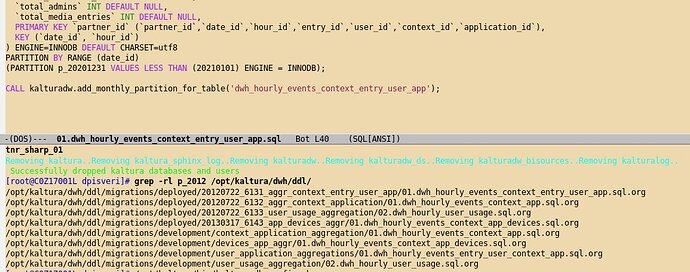

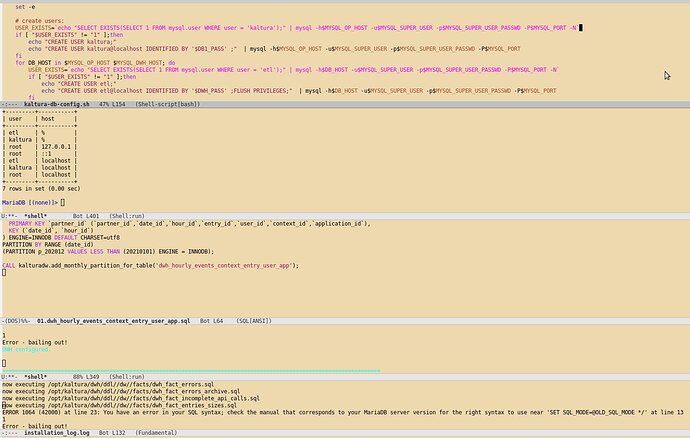

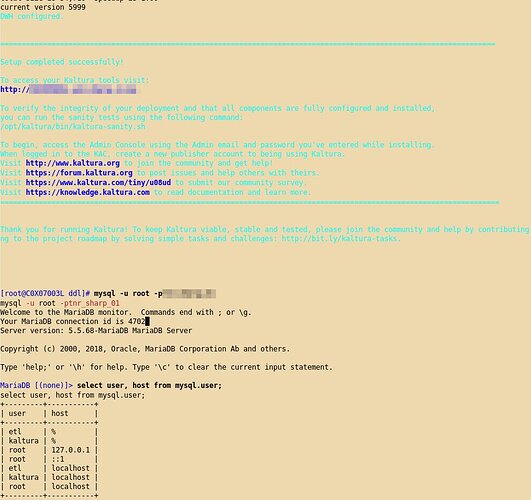

data-warehouse (kaltura-dwh, kaltura-pentaho, etc.)

administrator server (kaltura-front, etc.)

An all-in-one server contains all the elements.

On the other hand, you can use a separate server for each element.

On a single server, the load from video uploads and transcoding reduces delivery performance.

The simplest cluster separates the front node from the other elements.

One server runs the front node, and another server runs everything else.

If you want to improve delivery performance further, add a second front node.

At this point, the Kaltura cluster consists of three servers.

However, a proxy server (load balancer or CDN host) is required when running multiple front nodes.

In that case, the Kaltura cluster consists of four servers (a proxy server, two front nodes, and a backend server).
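The proxy's job of spreading requests over the front nodes can be pictured as round-robin selection. The following is a minimal Python sketch that assumes plain round-robin (real load balancers and CDNs offer other policies) and uses hypothetical host names; it is not Kaltura code.

```python
from itertools import cycle

# Hypothetical host names for the two front nodes.
FRONT_NODES = ["front1.example.com", "front2.example.com"]


class RoundRobinBalancer:
    """Hands out backends in turn, as a simple load balancer might."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("at least one backend is required")
        self._pool = cycle(backends)

    def next_backend(self):
        # Each call returns the next front node, wrapping around at the end.
        return next(self._pool)


if __name__ == "__main__":
    balancer = RoundRobinBalancer(FRONT_NODES)
    for request_id in range(4):
        print(request_id, balancer.next_backend())
```

With two front nodes, consecutive requests alternate between them, so each node handles roughly half of the delivery traffic.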

Multiple Sphinx nodes and batch servers can be installed.

Multiple streaming servers can also be installed (this requires a proxy server).

Therefore, you can build a cluster that matches the server resources available to you.
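The rules above can be collected into a small planning check. The following Python sketch encodes only the constraints stated in this reply: multiple front nodes or streaming servers need a proxy, and a multi-server cluster needs a shared `/opt/kaltura/web`. The role labels, `Server`, and `check_layout` are hypothetical helpers for illustration, not part of Kaltura.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """One machine in the cluster and the roles it runs (labels are hypothetical)."""
    name: str
    roles: set = field(default_factory=set)


def check_layout(servers, has_proxy, has_nfs):
    """Return a list of problems with a planned layout (empty list = OK)."""
    problems = []

    def count(role):
        return sum(1 for s in servers if role in s.roles)

    # Multiple front nodes sit behind a proxy (load balancer or CDN host).
    if count("front") > 1 and not has_proxy:
        problems.append("multiple front nodes require a proxy server")
    # The same applies to multiple streaming servers.
    if count("streaming") > 1 and not has_proxy:
        problems.append("multiple streaming servers require a proxy server")
    # Any multi-server cluster must share /opt/kaltura/web, e.g. over NFS.
    if len(servers) > 1 and not has_nfs:
        problems.append("/opt/kaltura/web must be shared between servers (NFS)")
    return problems


if __name__ == "__main__":
    backend_roles = {"sphinx", "streaming", "batch", "database", "dwh", "admin"}
    layout = [
        Server("front1", {"front"}),
        Server("front2", {"front"}),
        Server("backend", backend_roles),
    ]
    print(check_layout(layout, has_proxy=False, has_nfs=True))
    print(check_layout(layout, has_proxy=True, has_nfs=True))
```

The first call reports the missing proxy for the two front nodes. The second call, which models the four-server layout (proxy plus three servers), returns an empty list.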

Note that if you build a Kaltura cluster, the `/opt/kaltura/web` directory must be shared between the servers.

Therefore, an NFS server is also required.
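To confirm on each node that `/opt/kaltura/web` really sits on the NFS share, you can look up which mount contains it. This is a minimal Python sketch that assumes a Linux host exposing `/proc/mounts` and treats any filesystem type starting with `nfs` (`nfs`, `nfs4`) as NFS. The helper names and the sample NFS host are hypothetical.

```python
import os


def mount_for(path, mounts_text):
    """Return (mount_point, fstype) of the longest mount containing path."""
    best = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        # /proc/mounts encodes spaces in mount points as \040.
        mount_point = fields[1].replace("\\040", " ")
        fstype = fields[2]
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            if best is None or len(mount_point) > len(best[0]):
                best = (mount_point, fstype)
    return best


def is_on_nfs(path, mounts_text):
    found = mount_for(path, mounts_text)
    return found is not None and found[1].startswith("nfs")


if __name__ == "__main__" and os.path.exists("/proc/mounts"):
    with open("/proc/mounts") as f:
        text = f.read()
    target = os.path.realpath("/opt/kaltura/web")
    print(target, "on NFS:", is_on_nfs(target, text))
```

Picking the longest matching mount point matters because `/` also "contains" every path. Only the most specific mount tells you where `/opt/kaltura/web` actually lives.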

Regards,