Hello @t-saito

I checked the console output during the installation. It was as follows.

Configuring your Kaltura DB…

…

Checking MySQL version…

Ver 5.5.68-MariaDB found compatible

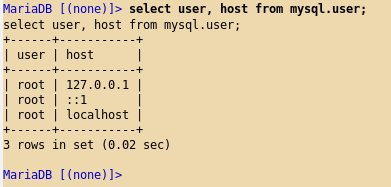

CREATE USER kaltura;

CREATE USER etl;

CREATE DATABASE kaltura;

CREATE DATABASE kaltura_sphinx_log;

CREATE DATABASE kalturadw;

CREATE DATABASE kalturadw_ds;

CREATE DATABASE kalturadw_bisources;

CREATE DATABASE kalturalog;

Checking connectivity to needed daemons…

Connectivity test passed:)

Cleaning cache…

Populating DB with data… please wait…

Output for /opt/kaltura/app/deployment/base/scripts/installPlugins.php being logged into /opt/kaltura/log/installPlugins.log

Output for /opt/kaltura/app/deployment/base/scripts/insertDefaults.php being logged into /opt/kaltura/log/insertDefaults.log

Output for /opt/kaltura/app/deployment/base/scripts/insertPermissions.php being logged into /opt/kaltura/log/insertPermissions.log

Output for /opt/kaltura/app/deployment/base/scripts/insertContent.php being logged into /opt/kaltura/log/insertContent.log

Generating UI confs…

…

and

…

Deploying analytics warehouse DB, please be patient as this may take a while…

Output is logged to /opt/kaltura/dwh/logs/dwh_setup.log.

sending incremental file list

MySQLInserter/

MySQLInserter/TOP.png

MySQLInserter/mysqlinserter.jar

MySQLInserter/plugin.xml

sent 2,647,329 bytes received 77 bytes 5,294,812.00 bytes/sec

total size is 2,646,419 speedup is 1.00

sending incremental file list

MappingFieldRunner/

MappingFieldRunner/MAP.png

MappingFieldRunner/mappingfieldrunner.jar

MappingFieldRunner/plugin.xml

sent 90,966 bytes received 77 bytes 182,086.00 bytes/sec

total size is 90,670 speedup is 1.00

sending incremental file list

GetFTPFileNames/

GetFTPFileNames/FTP.png

GetFTPFileNames/getftpfilenames.jar

GetFTPFileNames/plugin.xml

sent 7,310,942 bytes received 77 bytes 14,622,038.00 bytes/sec

total size is 7,308,893 speedup is 1.00

sending incremental file list

FetchFTPFile/

FetchFTPFile/FTP.png

FetchFTPFile/fetchftpfile.jar

FetchFTPFile/plugin.xml

sent 5,784,795 bytes received 77 bytes 11,569,744.00 bytes/sec

total size is 5,783,119 speedup is 1.00

sending incremental file list

DimLookup/

DimLookup/CMB.png

DimLookup/lookup.jar

DimLookup/plugin.xml

sent 3,689,118 bytes received 77 bytes 7,378,390.00 bytes/sec

total size is 3,687,964 speedup is 1.00

sending incremental file list

UserAgentUtils.jar

ksDecrypt.jar

sent 54,896 bytes received 54 bytes 109,900.00 bytes/sec

total size is 54,710 speedup is 1.00

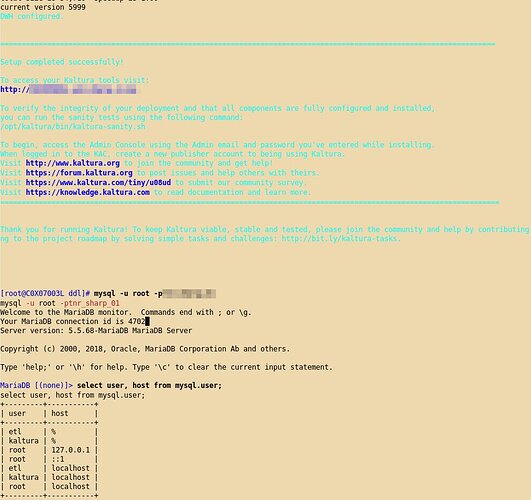

current version 5999

DWH configured.

…
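In case it helps with checking further, here is a minimal sketch for scanning the log files named in the output above for problems. The `scan_logs` helper and the `error|fatal|exception` search pattern are my own assumptions, not part of the Kaltura installer, so adjust them as needed.

```shell
#!/bin/sh
# Hypothetical helper (not part of Kaltura): print suspicious lines
# from each readable log file given as an argument.
scan_logs() {
    for f in "$@"; do
        if [ -r "$f" ]; then
            # -i: ignore case, -n: line numbers, -H: file name, -E: extended regex
            grep -inHE 'error|fatal|exception' "$f"
        else
            echo "skipped (not readable): $f" >&2
        fi
    done
    # A log with no matches is not a failure of the scan itself.
    return 0
}

# Log paths taken from the installer output above.
scan_logs \
    /opt/kaltura/log/installPlugins.log \
    /opt/kaltura/log/insertDefaults.log \
    /opt/kaltura/log/insertPermissions.log \
    /opt/kaltura/log/insertContent.log \
    /opt/kaltura/dwh/logs/dwh_setup.log
```

If it prints no matching lines, none of the logs contain the assumed pattern. That does not by itself prove every step succeeded.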

Regards,